The Python tool: works with JSON.

Our research challenge: get the data, and generate datasets.

This Python tool is able to gather and organize these metadata from the raw JSON output.

RESEARCH PROCESS Big thanks to Lanfei Liu, who built a tool in Python using Springer Metadata API and other APIs in order to generate literature review data. By learning her code, I figured out how to use Python 2 to gather data from the JSON output filtered by attributes (technically, this is called "query"), and create (write) CSV files for the output in table view.

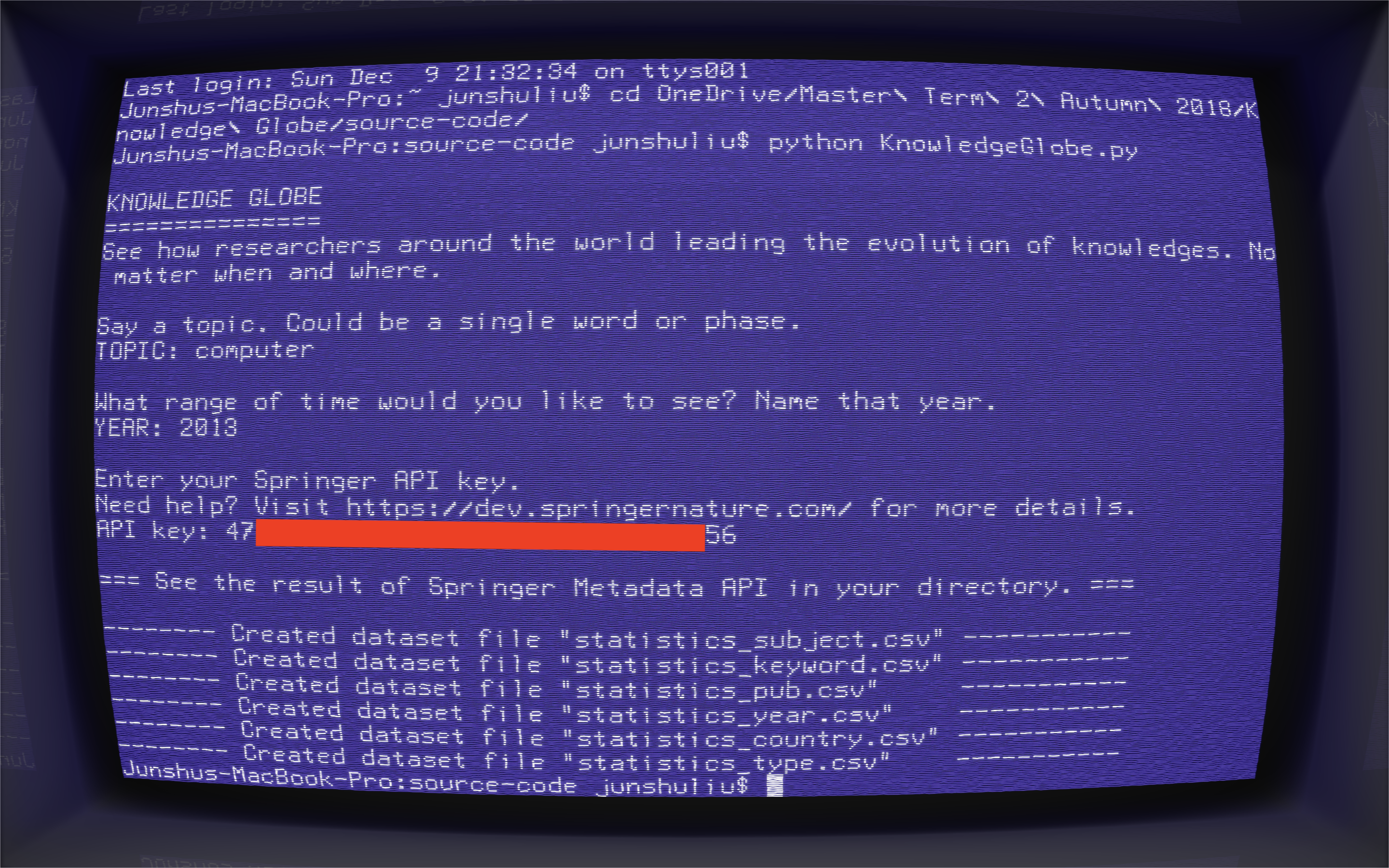

See our source code in Python 2.

A simple interface in the terminal will ask users the Springer Nature API key, the keyword, and the year (optional). After user's input, those 6 datasets will be generated and being saved to a new folder. The folder name is your keyword (and the year, if applicable).

After getting data, the Python tool will create 6 datasets for each element in “facets”. The dataset consists in two columns, “count” and “value”. For each attribute, the Python tool will make two lists for “count” and “value” and then generate a CSV file. Each column contains data for each list.